Table of Contents

Introduction

The inverse of a matrix \(A\) is another matrix \(A^{-1}\) that has this property:

\[\begin{align*} A A^{-1} &= I \\ A^{-1} A &= I \end{align*} \]where \(I\) is the identity matrix. This is a nice property for a matrix to have, because then we can work with it in equations just like we might with ordinary numbers. For instance, to solve some linear system of equations \[ A x = b \] we can just multiply the inverse of \(A\) to both sides \[ x = A^{-1} b \] and then we have some unique solution vector \(x\). Again, this is just like we would do if we were trying to solve a real-number equation like \(a x = b\).

Now, a matrix has an inverse whenever it is square and its rows are linearly independent. But not every system of equations we might care about will give us a matrix that satisfies these properties. The coefficient matrix \(A\) would fail to be invertible if the system did not have the same number of equations as unknowns (\(A\) is not square), or if the system had dependent equations (\(A\) has dependent rows).

Generalized inverses are meant to solve this problem. They are meant to solve equations like \(A x = b\) in the “best way possible” when \(A^{-1}\) fails to exist. There are many kinds of generalized inverses, each with its own “best way.” (They can be used to solve ridge regression problems, for instance.)

The most common is the Moore-Penrose inverse, or sometimes just the pseudoinverse. It solves the least-squares problem for linear systems, and therefore will give us a solution \(\hat{x}\) so that \(A \hat{x}\) is as close as possible in ordinary Euclidean distance to the vector \(b\).

The notation for the Moore-Penrose inverse is \(A^+\) instead of \(A^{-1}\). If \(A\) is invertible, then in fact \(A^+ = A^{-1}\), and in that case the solution to the least-squares problem is the same as the ordinary solution (\(A^+ b = A^{-1} b\)). So, the MP-inverse is strictly more general than the ordinary inverse: we can always use it and it will always give us the same solution as the ordinary inverse whenever the ordinary inverse exists.

We will look at how we can construct the Moore-Penrose inverse using the SVD. This turns out to be an easy extension to constructing the ordinary matrix inverse with the SVD. We will then see how solving a least-squares problem is just as easy as solving an ordinary equation.

Example - System with an Invertible Matrix

First let’s recall how to solve a system whose coefficient matrix is invertible. In this case, we have the same number of equations as unknowns and the equations are all independent. Then \(A^{-1}\) exists and we can find a unique solution for \(x\) by multiplying \(A^{-1}\) on both sides.

For instance, say we have

\[ \left\{\begin{align*} x_1 - \frac{1}{2}x_2 &= 1 \\ -\frac{1}{2} x_1 + x_2 &= -1 \end{align*}\right. \]

Then

\[ \begin{array}{c c} A = \begin{bmatrix} 1 & -1/2 \\ -1/2 & 1 \end{bmatrix}, &A^{-1} = \begin{bmatrix} 4/3 & 2/3 \\ 2/3 & 4/3 \end{bmatrix} \end{array} \]

and

\[x = A^{-1}b = \begin{bmatrix} 4/3 & 2/3 \\ 2/3 & 4/3 \end{bmatrix} \begin{bmatrix} 1 \\ -1 \end{bmatrix} = \begin{bmatrix} 2/3 \\ -2/3 \end{bmatrix} \]

So \(x_1 = \frac{2}{3}\) and \(x_2 = -\frac{2}{3}\).

Constructing Inverses with the SVD

The singular value decomposition (SVD) gives us an intuitive way constructing an inverse matrix. We will be able to see how the geometric transforms of \(A^{-1}\) undo the transforms of \(A\).

The SVD says that for any matrix \(A\),

\[ A = U \Sigma V^* \]

where \(U\) and \(V\) are orthogonal matricies and \(\Sigma\) is a diagonal matrix.

Now, if \(A\) is invertible, we can use its SVD to find \(A^{-1}\) like so:

\[ A^{-1} = V \Sigma^{-1} U^* \]

If we have the SVD of \(A\), we can construct its inverse by swapping the orthogonal matrices \(U\) and \(V\) and finding the inverse of \(\Sigma\). Since \(\Sigma\) is diagonal, we can do this by just taking reciprocals of its diagonal entries.

Example

Let’s look at our earlier matrix again:

\[ A = \begin{bmatrix} 1 & -1/2 \\ -1/2 & 1 \end{bmatrix} \]

It has SVD

\[ A = U \Sigma V^* = \begin{bmatrix} \sqrt{2}/2 & -\sqrt{2}/2 \\ \sqrt{2}/2 & \sqrt{2}/2 \end{bmatrix} \begin{bmatrix} 3/2 & 0 \\ 0 & 1/2 \end{bmatrix} \begin{bmatrix} \sqrt{2}/2 & \sqrt{2}/2 \\ -\sqrt{2}/2 & \sqrt{2}/2 \end{bmatrix} \]

And so,

\[ A^{-1} = V \Sigma^{-1} U^* = \begin{bmatrix} \sqrt{2}/2 & -\sqrt{2}/2 \\ \sqrt{2}/2 & \sqrt{2}/2 \end{bmatrix} \begin{bmatrix} 2/3 & 0 \\ 0 & 2 \end{bmatrix} \begin{bmatrix} \sqrt{2}/2 & \sqrt{2}/2 \\ -\sqrt{2}/2 & \sqrt{2}/2 \end{bmatrix} \]

and after multiplying everything out, we get

\[ A^{-1} = \begin{bmatrix} 4/3 & 2/3 \\ 2/3 & 4/3 \end{bmatrix} \]

just like we had before.

In an earlier post, we saw how we could use the SVD to visualize a matrix as a sequence of geometric transformations. Here is the matrix \(A\) followed by \(A^{-1}\), acting on the unit circle:

The inverse matrix \(A^{-1}\) reverses exactly the action of \(A\). The matrix \(A\) will map any circle to a unique ellipse, with no overlap. So, \(A^{-1}\) can map ellipses back to those same circles without any ambiguity. We don’t “lose information” by applying \(A\).

Constructing MP-Inverses with the SVD

We can in fact do basically the same thing for any matrix, not just the invertible ones. The SVD always exists, so for some matrix \(A\), first write

\[ A = U \Sigma V^* \]

And then find the MP-inverse by

\[ A^+ = V \Sigma^+ U^* \]

Now, the matrix \(A\) might not be invertible. If it is not square, then, to find \(\Sigma^+\), we need to take the transpose of \(\Sigma\) to make sure all the dimensions are conformable in the multiplication. It \(A\) is singular (dependent rows), then \(\Sigma\) will have 0’s on its diagaonal, so we need to make sure only take reciprocals of the non-zero entries.

Summarizing, to find the Moore-Penrose inverse of a matrix \(A\):

- Find the Singular Value Decomposition: \(A = U \Sigma V^*\) (using R or Python, if you like).

- Find \(\Sigma^+\) by transposing \(\Sigma\) and taking the reciprocal of all its non-zero diagonal entries.

- Compute \(A^+ = V \Sigma^+ U^*\)

Example - An Inconsistent System

Let’s find the MP-inverse of a singular matrix. Let’s take

\[A = \begin{bmatrix} 1 & 1 \\ 1 & 1 \end{bmatrix} \]

Because the rows of this matrix are linearly dependent, \(A^{-1}\) does not exist. But we can still find the more general MP-inverse by following the procedure above.

So, first we find the SVD of \(A\):

\[ A = U \Sigma V^* = \begin{bmatrix} \sqrt{2}/2 & -\sqrt{2}/2 \\ \sqrt{2}/2 & \sqrt{2}/2 \end{bmatrix} \begin{bmatrix} 2 & 0 \\ 0 & 0 \end{bmatrix} \begin{bmatrix} \sqrt{2}/2 & \sqrt{2}/2 \\ -\sqrt{2}/2 & \sqrt{2}/2 \end{bmatrix} \]

Then we apply the procedure above to find \(A^+\):

\[ A^+ = V \Sigma^+ U^* = \begin{bmatrix} \sqrt{2}/2 & -\sqrt{2}/2 \\ \sqrt{2}/2 & \sqrt{2}/2 \end{bmatrix} \begin{bmatrix} 1/2 & 0 \\ 0 & 0 \end{bmatrix} \begin{bmatrix} \sqrt{2}/2 & \sqrt{2}/2 \\ -\sqrt{2}/2 & \sqrt{2}/2 \end{bmatrix} \]

And now we multiply everything out to get:

\[ A^+ = \begin{bmatrix} 1/4 & 1/4 \\ 1/4 & 1/4 \end{bmatrix} \]

This is the Moore-Penrose inverse of \(A\).

Like we did for the invertible matrix before, let’s get an idea of what \(A\) and \(A^+\) are doing geometrically. Here they are acting on the unit circle:

Notice how \(A\) now collapses the circle onto a one-dimensional space. This is a consequence of it having dependent columns. For matricies with dependent columns, its image will be of lesser dimension than the space it’s mapping into. Another way of saying this is that it has a non-trivial null space. It “zeroes out” some of the dimensions in its domain during the transformation.

What if \(A\) were the coefficient matrix of a system of equations? We might have:

\[ \left\{ \begin{align*} x_1 + x_2 &= b_1 \\ x_1 + x_2 &= b_2 \end{align*} \right. \]

for some \(b_1\) and \(b_2\).

Now, unless \(b_1\) and \(b_2\) are equal, this system won’t have an exact solution for \(x_1\) and \(x_2\). It will be inconsistent. But, with \(A^+\), we can still find values for \(x_1\) and \(x_2\) that minimize the distance between \(A x\) and \(b\). More specifically, let \(\hat{x} = A^{+}b\). Then \(\hat{x}\) will minimize \(|| b - A x ||^2 \), the squared error, and \( \hat{b} = A \hat{x} = A A^{+} x \) is the closest we can come to \(b\). (The vector \(b - A \hat{x}\) is sometimes called the residual vector.)

We have

\[ \hat{x} = A^{+} b = \begin{bmatrix} 1/4 (b_1 + b_2) \\ 1/4 (b_1 + b_2) \end{bmatrix} \]

so \(x_1 = \frac{1}{4} (b_1 + b_2)\) and \(x_2 = \frac{1}{4} (b_1 + b_2)\). And the closest we can get to \(b\) is

\[ \hat{b} = A \hat{x} = \begin{bmatrix} 1/2 (b_1 + b_2) \\ 1/2 (b_1 + b_2) \end{bmatrix} \]

In other words, if we have to make \(x_1 + x_2\) as close as possible to two different values \(b_1\) and \(b_2\), the best we can do is to choose \(x_1\) and \(x_2\) so as to get the average of \(b_1\) and \(b_2\).

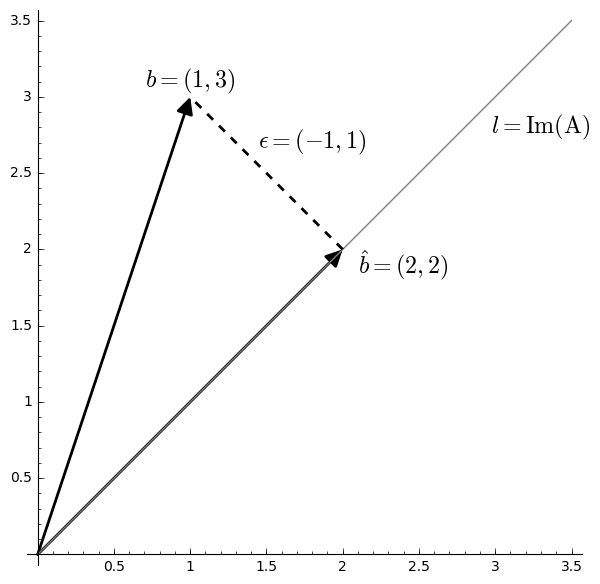

Or we could think about this problem geometrically. In order for there to be a solution to \(A x = b\), the vector \(b\) has to reside in the image of \(A\). The image of \(A\) is the span of its columns, which is all vectors like \(c(1, 1)\) for a scalar \(c\). This is just the line through the origin in the picture above. But \(b = (b_1, b_2)\) is not on that line if \(b_1 \neq b_2\), and so instead we minimize the distance between the two with its orthogonal projection \(\hat b\). The error or residual is the difference \(\epsilon = b - \hat{b}\).

Comments powered by Talkyard.